A programmable programming language – The past as the future

Published on Sep 04, 2023 by Impaktor.

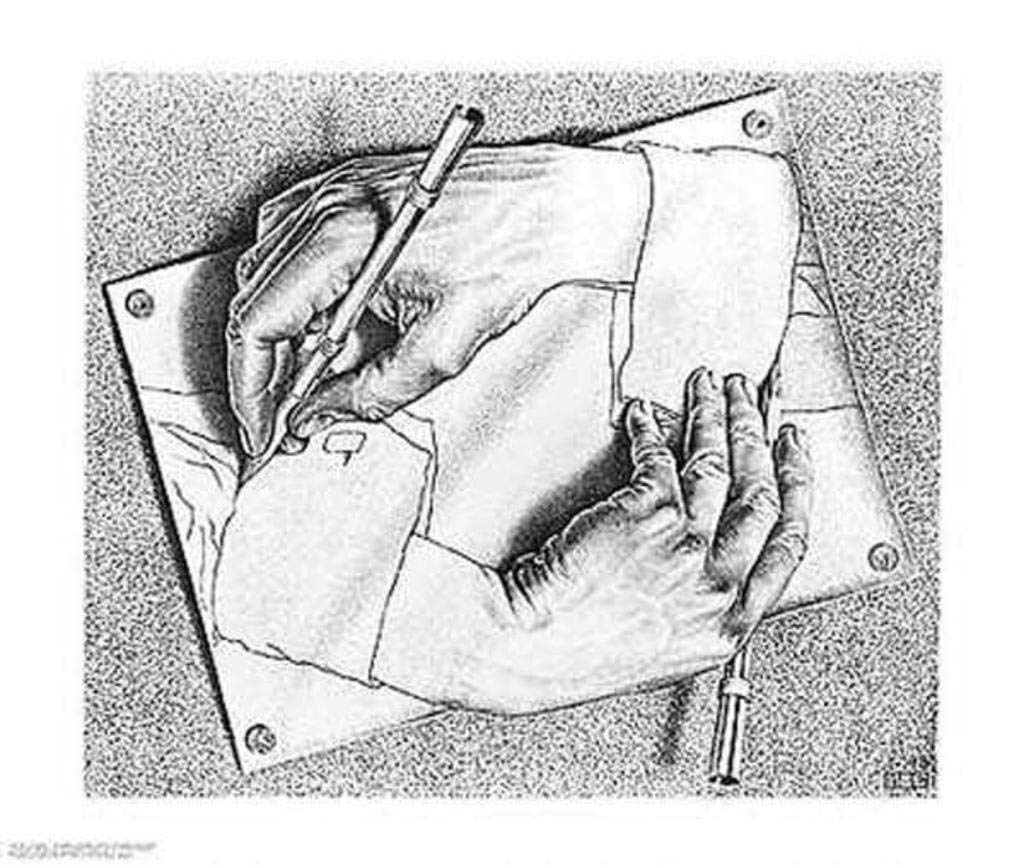

Figure 1: Lisp can be written in itself (M.C. Escher)

Table of Contents

- 1. Introduction

- 2. Reflections on “Growing a language”

- 3. Lisp Introduction

- 3.1. Why learn Lisp?

- 3.2. Introduction to Lisp

- 3.3. Recursion vs iteration

- 3.3.1. Example: factorial function in Python

- 3.3.2. Example: factorial recursive function in Python

- 3.3.3. Example: factorial recursive function in Lisp recursive process

- 3.3.4. Example: factorial recursive function in Lisp iterative process

- 3.3.5. Example: factorial recursive function in Python iterative process

- 3.3.6. Generate code as data

- 3.3.7. More: SICP - Structure and Interpretation of Computer Languages

- 3.4. Functions as first class citizens

- 3.5. On the peculiarity of Lisp syntax

- 3.6. Lisp speed compared

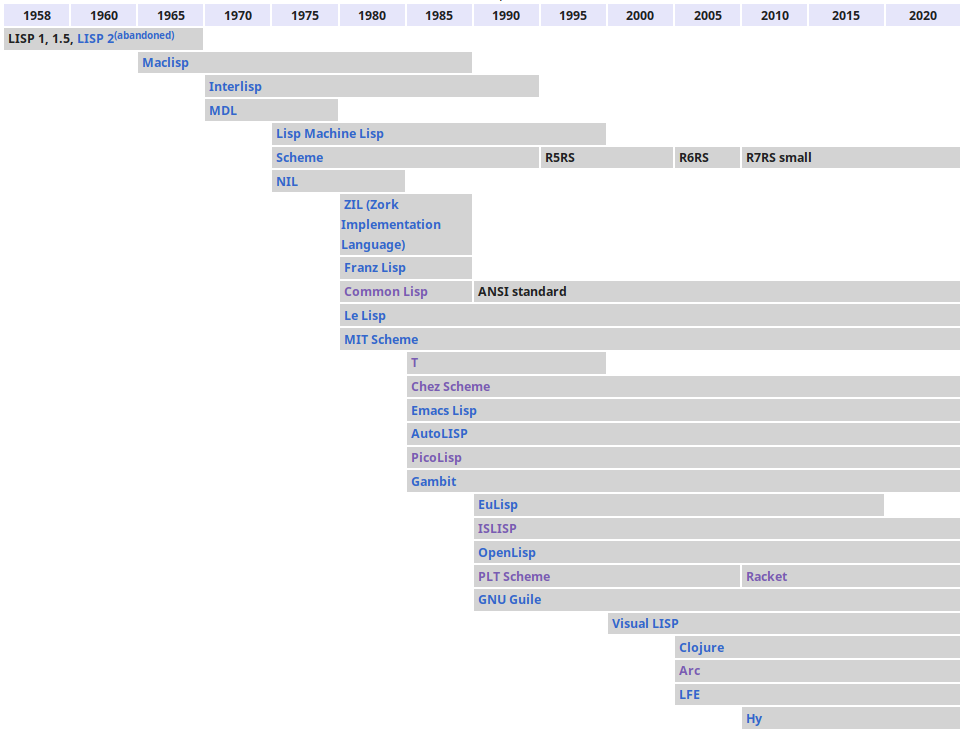

- 4. Lisp Family History

- 5. Metaprogramming

- 6. Macros

- 7. Lisp vs other languages

- 8. REPL (demonstration)

- 9. Appendix: Why it has Lisp not taken off?

- 10. Appendix: Resources

- 11. Appendix: Supporting material

1. Introduction

This is a combination of write-up & presentation (using org-present), thus the many small/short sub-sections, essentially making up one “slide”.

1.1. Presentation abstract

Programming languages teach you not to want what they cannot provide

Paul Graham

A presentation on what makes a programming language powerful with aim to make Python programmers see colors they have never seen before, motivating them to go beyond Python - which will soon be highly relevant with the advent of Mojo. We will look at some of the expressive limitations of Python, and how this is mitigated in other languages. We aim to demonstrate macros / meta-programming, meaning code that writes code. We hope to pique the interest of the reader to venture further to widen their horizons. Warning:

- This is not a talk on Mojo, but rather on features Mojo will/aught to have.

- There will be Lisp… but we will not teach it, only preach it.

1.2. Motivation – Why this talk?

Computer languages differ not so much in what they make possible, but in what they make easy.

Larry Wall

Exclusively using the same programming language, such as Python, risk calcifying the way we think of how to solve a programming problem.

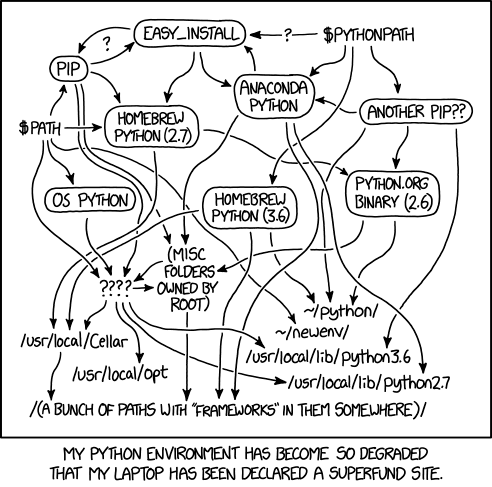

There are several restrictions in Python that irks me:

- Python programming is an exercise in writing as little actual Python code as possible, and instead rely on external modules written in C/C++/Fortran, to combat how slow it is. One could argue this is “Feature not a bug”, as Python is the glue to wield the power of modules.

- Lacking in expressive power, can not abstract syntactic patterns (metaprogramming).

Python keeps breaking backwards compatibility, leading in to versioning hell, as new versions of packages and the language itself breaks working code.

Figure 2: xkcd.com/1987

1.3. Taxonomy of programming languages

It seems to me that there have been two really clean, consistent models of programming so far: the C model and the Lisp model. These two seem points of high ground, with swampy lowlands between them. As computers have grown more powerful, the new languages being developed have been moving steadily toward the Lisp model. A popular recipe for new programming languages in the past 20 years has been to take the C model of computing and add to it, piecemeal, parts taken from the Lisp model, like runtime typing and garbage collection.

Paul Graham - The Roots of Lisp

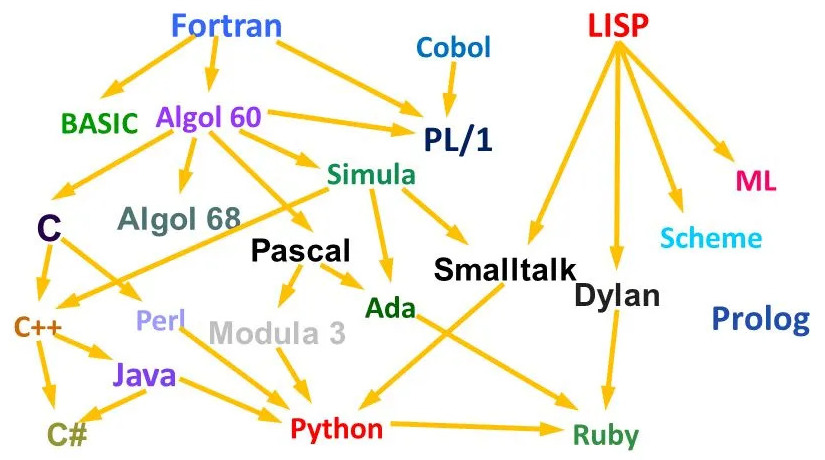

1.3.1. Historic time line of (some) languages

Notable programming languages

- 1957 FORTRAN-1 (Formula Translating System) by IBM

- 1958 LISP (List processing Language) by MIT

- 1958 ALGOL (Algorithmic Language)

- 1959 COBOL (Common Business Oriented Language)

- 1964 BASIC (Beginner’s All-purpose Symbolic Instruction Code)

- 1972 Smalltalk, by Xerox

- Algol derived:

- 1970 Pascal

- 1972 C, by Unix

- 1979 C++, by Bell Labs / Bjarne Stroustrup (aka “C with classes”, until 1983)

- 1995 Java, by Sun Micro systems

- 1991 Python 1, by Guido Van Rossum

- 2000 Python 2

- 2008 Python 3

The first high level programming languages FORTRAN, COBOL and LISP are still in use today, although for different reasons: the former two for maintaining old code on legacy systems, where as the latter because it is still offering what other languages still lack.

1.3.2. What languages fix

Many languages can be described surprisingly well with a single sentence, as noted by Kevin Kelleher, motivating why they sprung into being in the first place, and justifying their use:

- ALGOL (1957)

- Assembly language is too low-level.

- FORTRAN (1958)

- Assembly language is too low-level.

- LISP (1958)

- Turing Machines are an awkward to describe computation

- Scheme (1975)

- MacLisp is a kludge.

- Common Lisp (1984)

- There are too many dialects of Lisp.

- COBOL (1959)

- Fortran is scary.

- Simula (1962)

- Algol isn’t good enough at simulations.

- Pascal (1970)

- Algol doesn’t have enough data types.

- Smalltalk (1972)

- Not everything in Simula is an object.

- C (1972)

- Assembly language is too low-level.

- C++ (1979)

- C is too low-level.

- Java (1995)

- C++ is a kludge. And Microsoft is going to crush us.

- C# (2000)

- Java is controlled by Sun.

- Perl (1987)

- Shell scripts/awk/sed are not enough like programming languages.

- Ruby (1995)

- Perl is a kludge, and Lisp syntax is scary.

- Python (-91, -00, -08)

- Perl is a kludge.

1.3.3. Fun fact

The code seen in Terminator (1984) is COBOL and (Apple-II 6502 machine code)

In May 1984 Issue, In my 73 Magazine column was comparing four popular programming languages of the day: BASIC, Pascal, PL/I, and COBOL. I had implemented the same simple algorithm, an iterative method to sum a series of consecutive integers from 1 to 1000, in each language. The punch line came when I showed the best way to do this was to use Gauss’s famous method; it’s not the programming language, it’s the algorithm that makes computers run fast. (COBOL took 22.45 seconds to sum the numbers between 1 and 1000 on a Z-80.)

Figure 4: src.

1.4. A continuum of programming languages

Languages fall along a continuum of abstractness, from the most powerful all the way down to machine languages, which themselves vary in power.

Paul Graham - Beating the Averages

Figure 5: Fields of science on a continuum (xkcd.com/435)

Not all programming languages are created equal, but fall on a “power continuum” of how expressive they are.

- A programmer recognizes which languages are “below” his language: “That language doesn’t even have feature X”

- When same programmer looks “up” the power continuum, he does not realize

he is looking up, instead he sees known features plus “strange bits”:

“I’ve never needed to use that”.

- BASIC

- “why would I ever need recursion?”

- C

- “Why would I ever need objects?”

- C++

- “Why would I ever need macros?”

Learning new languages higher in the continuum, helps to become a better programmer in general, as one can clearly see what is lacking in the lower languages.

The language “highest” in the spectrum is generally considered to be Lisp. It is the language that all other useful languages converge towards.

despite the flowery phrase that “all the programming languages converge to lisp”, it is factually accurate that all programming languages have been copying lisp since its inception, and we have every reason to believe will continue to do so going into the future.

1.5. Future of programming languages – Convergence towards Lisp

If you look at these languages in order, Java, Perl, Python, you notice an interesting pattern. At least, you notice this pattern if you are a Lisp hacker. Each one is progressively more like Lisp. … It’s 2002, and programming languages have almost caught up with 1958.

Paul Graham - Revenge of the Nerds

- JavaScript

- “JavaScript is Lisp in C’s clothing” (takes closures, scoping, high-order functions, and lambdas from Scheme)

- C++

- Now has lambda & tries to duplicate Lisp macros with

constexpr(eval function at compile time) &consteval - Julia

- Has a Lisp inspired macro system

- Rust

- Heavily inspired by Lisp’s (Scheme’s) hygienic macro system

- Zig

- C/C++ replacement language. Tries to mimic Lisp’s macro system

- Nim

- “As fast as C, as expressive as Python, as extensible as Lisp.”

- Python

- Lisp (Scheme) macro system considered for Python PEP638

- Mojo

- Superset of Python, for AI/machine learning, x 30k speed up

I’ve done a lot of work in both Python with Scipy/Numpy, and Julia. Python is painfully inexpressive in comparison. Not only this, but Julia has excellent type inference. Combined with the JIT, this makes it very fast. Inner numerical loops can be nearly as fast as C/Fortran.

Expanding on the macro system, this has allowed things like libraries that give easy support for GPU programming, fast automatic differentiation, seamless Python interop, etc.

1.6. Legacy of Lisp

We have previously spoken of the convergence towards Lisp. Some of the features of Lisp, are:

- First interpreted language (can be Interpreted step by step, rather than compiled)

- Conditionals (

IF-statements) - Functions as first class citizens (more on this later)

- Lambda functions (C++11, Python)

- Recursion (more on this and tail recursion later)

- Garbage collection

- Mix-ins (Fun fact: terminology from Ice Cream shop, see p. 457 PAIP)

- Dynamic typing (all variables are pointers to values, values have type, not variables)

- REPL e.g. other languages executes out of process via system shell

- Map / filter / apply / reduce

1.7. Mojo – What is to come

Mojo (same creator as LLVM and Swift) combines the usability of Python with the performance of C, unlocking unparalleled programmability of AI hardware and extensibility of AI models.

Among the future languages that might be of special interest, is Mojo that is a super-set of Python (as C++ is unto C, or TypeScript is to JavaScript). Thus, the user can write in usual Python syntax, and import Python modules, or scale all the way down to the metal.

It boast of 30,000 x speed up.

File extension: .mojo (or alternatively “fire emoticon”).

It is supposed to support metaprogramming, inspired by Lisp.

1.7.1. Variable declaration: var & let

Optimize speed and error checking

var data: Int = 42 # mutable let pi: F64 = 3.14 # immutable

1.7.2. fn and def & Progressive types / strong type checking

Mojo introduces a second function definition: fn, that is strongly type

checked, i.e. type must be provided:

fn adder(x: Int): -> Int

x += 1 # error! parameters are immutable

return x

fn adder(x: Int, y: F32): -> Int

"Overloading"

return x + y

Note: Although you can declare types in Python, is only for the linter (like mypy), the interpreter does not know anything about types, which is also why you can not overload functions in Python, depending on input type.

fn is a more pedantic and strict form of def:

- Arguments are immutable.

- Arguments require type (except

self). - Empty return type implies

: -> None. - Can still use dynamic type, but static essential for optimized performance.

- Allows for function overloading (like C++).

- Can define ownership (similar to Rust),

owner,inout,

1.7.3. struct (like class)

struct is like Python’s class, but static, and can use fn instead of

def, and enforce var=/=let declarations

struct MyClass:

var data: Int = 42 # mutable

let pi: F64 = 3.14 # immutable

fn __init__(self&, x: F32):

pass

Note: We can use def in a struct and fn outside a struct (might change?)

1.7.4. Memory management

Similar to Rust and Swift, it can declare

inout,borrowed,ownedon input parameters.fn take_ptr(owned p: UniquePointer): print("take_ptr") print(p.ptr) fn use_ptr(borrowed p: UniquePointer): print("use_ptr") print(p.ptr)Similar to C/C++ it has memory management with pointers.

var data = Pointer[int]

1.7.5. Python compatible

Can interoperate with Python modules:

from PythonInterface import Python let np = Python.import_module("numpy") array = np.array([1,2,3])

1.7.6. Autotuning to hardware

- MLIR

- Multi level intermediate representation, scales to exotic hardware types

- SIMD

- Single Instruction Multiple Data type as first class object, where single instruction can be executed across multiple elements in parallel on the underlying hardware. I.e. vectorization.

- Autotuning

- optimize code for hardware, e.g. tile size

- Parallelize

- method that in example speeds up Python from roughly X1000 for single threaded Mojo to X 26k times relative Python implementation.

Example from their website demonstrates matrix multiplication, where Mojo is 14 times faster than native Python, and with autotuning and slight code restructure and tiling (cache locality & data reuse), we get 4,000 times faster. In a second part, they demonstrate getting 26,000 speed up. (Third and final part not released at time of writing this).

1.7.7. Making a new language

- We have seen that Python has limitations.

- To overcome these a new language is being built.

- This raises the question: what is the best way to build a language?

2. Reflections on “Growing a language”

In 1998, Guy Steele gave a now classic talk at the OOPSLA Conference, titled Growing a Language (vid). In it, he notes:

[…] should you use a small or large language? No, use one that can grow. Leave choices open, so other people can make them later. Huge programs can not be coded from scratch all at once, there has to be a plan for growth. Modern programming languages are now so big they can not be built all at once

2.1. Example of language development

Consider development of several popular languages:

- Python 1 -> Python 2 -> Python 3, or

- Fortran 77 -> Fortran 90 -> Fortran 2023, or

- C -> C++,

Each move risk incurring breaking changes, marking a considerable shift, requiring large effort and collaboration from language developers.

2.2. A better way

Programming languages should be designed not by piling feature on top of feature, but by removing the restrictions and limitations that make additional features seem necessary

R7RS.pdf, (2013)

- New functionality added to the language by the user should be indistinguishable from functionality added by the language designers.

- Many users would add new functionality, experiment, according to each persons need.

- This is what macros in Lisp provide.

We can view programming language as a language of words. If new words defined by the users look like primitives, and if all primitives look like words defined by the users, then a new language would grow dynamically, based on the users experimentation and needs, that is larger, without any seams. Many users would implemented new language features, and share their experimentation, growing the language fast. Such is the possibility in Lisp.

Guy Steele, “Growing a Language” (21:00-)

2.3. Example from Java

A Lisp programmer who notices a common pattern in their code can write a macro to give themselves a source-level abstraction of that pattern

Peter Seibel - PCL (chapter 7)

Consider a Java programmer that notice a pattern in their code, e.g. the

“enhanced” for-loop of Java 1.5 added an (JSR-201 2002-2004):

- Programmer detects pattern.

- Convince Sun Microsystems to add source-level abstraction of pattern.

- Sun has to publish a JSR & convene industry-wide “expert group”

- Compiler writers need to update compiler to support new feature

- Programmers probably not allowed to use new feature for along while (break backwards compatibility with older versions)

So an annoyance that Common Lisp programmers can resolve for themselves within five minutes plagues Java programmers for years.

Peter Seibel - PCL (chapter 7)

2.4. “Worse is better”

On a related note, the now classic article “worse is better”, by Richard P. Gabriel, argues striving for perfection (the “MIT/Lisp”-way) can hamper development, compared to just getting the ball rolling, to quickly fill a niche (“New Jersey/C”-way), and by so doing, growing the user base and number of contributors who can iteratively improve the project, to become “less worse” over time.

2.5. Compilation – From source to execution

To work towards how s program can modify itself, we shall first consider how code gets evaluated and executed. Compilers translate high level source code to low level machine code, specific for the hardware.

2.6. Compiling (e.g. C, C++, Lisp)

By compiling the source code it is translated by a compiler to (binary) machine instructions that are loaded into memory. This is then executed on the processor. The compiler is specific for the hardware.

+------+ +----------+ +---+-----+

| src +---->| Compiler +--->| Machine |

| code | | | | code |

+------+ +----------+ +---+-----+

|

|

+-------+ +----------+ |

| CPU | | Memory | |

| | | | |

| |<---| | <------+

| | | |

| | | |

+-------+ | |

+----------+

This is typically what happens when we compile C, C++, Fortran, or Lisp code.

2.7. Interpreter (e.g. Python, Lisp)

Python is both an interpreted and compiled language. That means we are not loading compiled Python machine code to memory directly, but rather the Python binary itself. This Python interpreter consists of two components: Python Virtual Machine (PVM), and a compiler. Code is compiled to (binary) byte code (not machine code), that is then interpreted by the PVM, that runs it.

+-------------+

| Memory |

|+-----------+|

||Python ||

||Interpreter||

||+---------+||

+------->||| PVM |||

| ||+---------+||

| ||+---------+|| +------+

| +-----+|| Compiler|||<------+ src |

| | ||+---------+|| | code |

| | |+-----------+| +------+

| | | |

| +---->| Byte code |

| +------+------+

| |

+---------------+

2.7.1. Run and inspect Python bytcode

In Python, we can inspect this process, e.g. if we only want to generate

the byte code, to folder __pycache__, we can run:

python -m py_compile <source.py>

The compiled file can still be run, using the Python interpreter:

python __pycache__/<source>.pyc

Furthermore, we can disassemble it, to look at the instructions:

python -m dis <source.py>

2.7.2. Python Interpreters

Python, unlike may other languages, has one single/standard implementation interpreter (CPython) which serves as a reference. However, there are some alternatives:

- Pypy

- Python written in Python. (Eventually faster, with up time).

- LPython

- High performance typed Python, with C/C++ backend.

- Jython

- Python (2.x) running on the Java Virtual Machine (JVM).

- Brython

- Generating JavaScript from Python.

- MicroPython

- For micro-controllers.

- Pyodide

- CPython ported to web assembly.

(Note: Do not confuse CPython with Cython, the former being the reference impelemntation by Guido van Rossum implemented in C, the latter being specialized Python compiler, for Python code targeted specifically for this compiler).

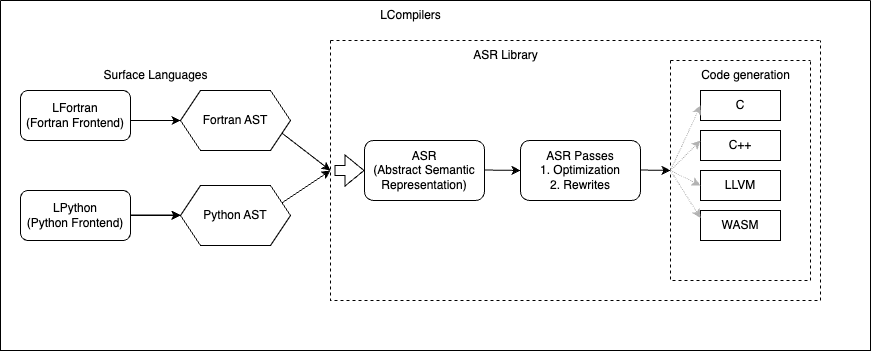

2.7.3. LPython - same speed as C/C++

Of note, is LPython, that compiles typed Python code to optimized executable binary, using C/C++ backend, or WebAssembly, i.e. giving equal speed to Python code as C++. LPython does allow seamless interoperability with CPython libraries, to “break out” to e.g. Numpy, PyTorch, etc. but then run at “slow” normal/CPython speed.

Figure 6: LPython is parsed to Abstract Syntax Tree (AST) which is transformed to a generic Abstract Semantic Representation, using LLVM. src

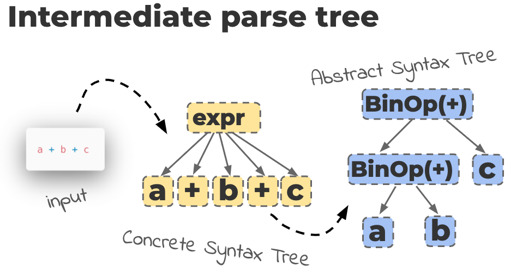

2.8. Abstract Syntax Tree (AST)

We don’t reason about our code in concepts of the characters or text of the source code, but rather by the abstract meaning that defines the operations. This is also how the code execution operates.

In order to go from written source code (text) to a program that is executing the instructions on the CPU, the code has to be parsed, broken down to a hierarchical structure that the compiler can analyze, optimize and execute it.

2.8.1. Building an AST

How this is done is implementation dependent. For many language implementations, including Python (CPython) the process is:

- Source code is read and parsed into a Parse Tree

- The parse tree is transformed to an Abstract Syntax Tree (as of Python 2.6) that is a cleaner representation than the parse tree, e.g. it removes comments (but still retains references to line number)

- The AST is transformed to a Control Flow (directed) Graph (CFG), that is then converted to byte code that can be executed and yield an output.

Figure 7: src

2.8.2. Python Example

The AST of a Python program can be accessed through the ast module, e.g.

we can expand it from the command line, for given any Python file:

python -m ast <source.py>

For example, the following source code:

import ast print(ast.dump(ast.parse("(1+2)*3", mode="eval"), indent=4))

yields a hierarchy for suitable for parsing and manipulation (note: structure changes depending on version):

Expression(

body=BinOp(

left=BinOp(

left=Constant(value=1),

op=Add(),

right=Constant(value=2)),

op=Mult(),

right=Constant(value=3)))

2.8.3. What’s the use of the AST module?

This is used, by several tools that need to parse the Python syntax:

- Lint checkers, like

flake8, andblack, as does - IDE’s syntax navigation & highlighting

Thus we could manipulate the code through the AST module as described in Intro to Python ast Module.

2.8.4. A foreboding

As we shall see, the AST of Lisp is the same as the source code itself. The equivalent source code:

(* (+ 1 2) 3)

will have an AST, that is itself a list, which is the basic type of the language:

(* (+ 1 2) 3)

(See Peter Norvig point 11 on macros and source parsing).

3. Lisp Introduction

The big revelation to me when I was in graduate school – when I finally understood that the half page of code on the bottom of page 13 of the Lisp 1.5 manual was Lisp in itself. These were “Maxwell’s Equations of Software!” This is the whole world of programming in a few lines that I can put my hand over.

Alan Kay - A Conversation with Alan Kay

We shall use the Lisp programming language to demonstrate several important key features missing from Python, which are relevant to know for use in Mojo, and when properly wielded shall grant its practitioners super powers.

For those who want to play with Lisp, there’s online interpreter:

3.1. Why learn Lisp?

LISP is worth learning for a different reason — the profound enlightenment experience you will have when you finally get it. That experience will make you a better programmer for the rest of your days, even if you never actually use LISP itself a lot.

Eric Raymond - How to become a hacker

This is the same argument you tend to hear for learning Latin. It won’t get you a job, except perhaps as a classics professor, but it will improve your mind, and make you a better writer in languages you do want to use, like English.

Paul Graham - Beating the Averages

The main benefits of Lisp is the experience of using it. We have (perhaps cheekily) argued that all programming languages converge towards Lisp, being the “highest” language in the spectrum of power and expressiveness. Thus, from learning Lisp, we can not only glean insights into the future of programming, but it will also enable feats not possible in any other languages.

Due to its expressive power, one can abstract more with fewer lines of code, leading to significant faster development time. The following is some user testimonies:

3.1.1. ITA testimony

Google’s Airfare search, by ITA:

- ITA President: one line of Lisp can replace 20 lines of C

- Often said 7-10x less code

- 200k lines of Common Lisp, plus C & C++ code

how much shorter are your programs if you write them in Lisp? Most of the numbers I’ve heard for Lisp versus C, for example, have been around 7-10x. But a recent article about ITA in New Architect magazine said that “one line of Lisp can replace 20 lines of C” and since this article was full of quotes from ITA’s president, I assume they got this number from ITA. If so then we can put some faith in it; ITA’s software includes a lot of C and C++ as well as Lisp, so they are speaking from experience.

Paul Graham - Carl de Marcken of ITA Software

The core of ITA’s application is a 200,000 line Common Lisp program that searches many orders of magnitude more possibilities than their competitors, who apparently are still using mainframe-era programming techniques.

Paul Graham - Revenge of the Nerds

3.1.2. Lisp is faster both to run and to develop

…asked 38 programmers to implement versions of a program in C, C++, or Java [or Lisp]. Lisp programs ran faster on average than C, C++ or Java programs (although the fastest Lisp program was not as fast as the fastest C program), and that Lisp programs took less development time than the other languages.

Peter Norvig - Lisp as an Alternative to Java

3.1.3. Lisp at NASA

I think it’s safe to say that if not for Lisp the Remote Agent would have failed.

Ron Garret - Lisping at JPL

- “Remote control Agent” ran on Deep Space 1

- Competing team failed to re-write it in C++

- The Remote Agent Experiment: Debugging Code from 60 Million Miles Away, Google talk, 2012-02-14 (vid)

3.1.4. ViaWeb success story

ViaWeb allowed end users to build web stores, directly in their browser.

- Could add new features faster than any of their 20-30 competitor

- Could add things competitors could not do

- Could be done using a smaller development team than competitor

- Yahoo Store, still one of Yahoo’s most profitable parts

- Source code was 20-25% macros

If you can use any language, which do you use? We chose Lisp. For one thing, it was obvious that rapid development would be important in this market. We were all starting from scratch, so a company that could get new features done before its competitors would have a big advantage. We knew Lisp was a really good language for writing software quickly, and server-based applications magnify the effect of rapid development, because you can release software the minute it’s done.

…

Our hypothesis was that if we wrote our software in Lisp, we’d be able to get features done faster than our competitors, and also to do things in our software that they couldn’t do. And because Lisp was so high-level, we wouldn’t need a big development team, so our costs would be lower.

Somewhat surprisingly, it worked. We eventually had many competitors, on the order of twenty to thirty of them, but none of their software could compete with ours. We had a wysiwyg online store builder that ran on the server and yet felt like a desktop application. Our competitors had cgi scripts. And we were always far ahead of them in features. Sometimes, in desperation, competitors would try to introduce features that we didn’t have. But with Lisp our development cycle was so fast that we could sometimes duplicate a new feature within a day or two of a competitor announcing it in a press release. By the time journalists covering the press release got round to calling us, we would have the new feature too.

…

The source code of the Viaweb editor was probably about 20-25% macros.

Paul Graham - Beating the Averages (details)

3.1.5. Lex & Cyc

Douglas Lenat is CEO of Cycorp, a 37 year project aiming to solve common-sense knowledge and reasoning in AI. He said:

Development of programs in Lisp precedes 1k - 50k faster than development in “modern” or “improved” programming languages.

Douglas Lenat - LISP: Lex Fridman’s favorite programming language

3.1.6. Lisp amplifies your power

Lisp, as a tool, is to the mind as the lever is to the arm. It amplifies your power and enables you to embark on projects beyond the scope of lesser languages like C. Writing in C is like building a mosaic out of lentils using a tweezer and glue. Lisp is like wielding an air gun with power and precision. It opens up whole kingdoms shut to other programmers.

Dr. Mark Tarver - The Bipolar Lisp Programmer

3.1.7. Lisp - The Machine that Builds Itself

In the paper “The Machine that Builds Itself: How the Strengths of Lisp Family Languages Facilitate Building Complex and Flexible Bioinformatic Models”

- Ideal for Artificial Programming

these features make these languages ideal tools for artificial intelligence and machine learning applications. We will specifically stress the advantages of domain-specific languages (DSL): languages which are specialized to a particular area and thus not only facilitate easier research problem formulation, but also aid in the establishment of standards and best programming practices as applied to the specific research field at hand. DSLs are particularly easy to build in Common Lisp, the most comprehensive Lisp dialect, which is commonly referred to as the “programmable programming language.” We are convinced that Lisp grants programmers unprecedented power to build increasingly sophisticated artificial intelligence systems that may ultimately transform machine learning and AI research in bioinformatics and computational biology.

The strengths of Lisp facilitate building complex and flexible applications Briefings in Bioinformatics, Volume 19, Issue 3, May 2018, Pages 537–543

3.1.8. Common usages of Lisp:

Figure 8: src

- AutoCad (2D & 3D Computer Aided Design)

- Maxima (computer algebra system)

- Emacs (Editor MACroS)

- Guile (a Scheme implementation), default script language of GNU/Linux OS

- GIMP script (Scheme, Python)

- gdb (GNU debugger)

- GNU Guix (package manager)

- Jak and Daxter, Naughty Dog’s game for the PlayStation 2, which is largely written in a domain-specific Lisp dialect Naughty Dog invented called GOAL, whose compiler is itself written in Common Lisp. (See PCL).

- Hacker News

- Viaweb (by Paul Graham, now Yahoo Store)

- Mirai 3D Graphics (animated face of Gollum in LotR)

- Grammarly (the core grammar engine)

- Google Flights, Bing Flights, etc through: ITA Software’s low fare search engine, used by travel websites such as Orbitz and Kayak.com and airlines such as American Airlines, Continental Airlines and US Airways. (wikipedia)

- Cyc (AI platform)

- DART (Dynamic Analysis and Replanning Tool), is an AI program used by the U.S. military to optimize and schedule the transportation of supplies or personnel and solve other logistical problems. is said to alone have paid back during the years from 1991 to 1995 for all thirty years of DARPA investments in AI research.)

- NASA’s Remote Agent, for autonomous spacecraft control system, (Lisping

at JPL) used in:

Deep Space 1 probe (1998)

Remote Agent controlled DS1 for two days in May of 1999. During that time we were able to debug and fix a race condition that had not shown up during ground testing. (Debugging a program running on a $100M piece of hardware that is 100 million miles away is an interesting experience. Having a read-eval-print loop running on the spacecraft proved invaluable in finding and fixing the problem.

NASA’s Pathfinder mission: (podcast)

At the time it was more or less taken for granted that AI work was done in Lisp. C++ barely existed. Perl was brand new. Java was years away. Spacecraft were mostly programmed in assembler, or, if you were really being radical, Ada.

- Health diagnostics in Voyager

3.2. Introduction to Lisp

Lisp, upon its discovery in 1958, embodies 9 main ideas, outlined in What made Lisp different, that made it the defacto language for AI programming in USA up until the 1990s, (although Europe and Japan also used Prolog, as noted by Norvig/PAIP). Lisp has strong merits as a fundamental programming language:

In Lisp, the code itself is represented as lists, which is the fundamental data structure. It is argued that it is better to have 100 functions operate on one data structure than 10 functions on 10 data structures (although lisp has vectors, hash tables, associated lists (what Python calls “dictionaries”), and other data structures as well).

3.2.1. Prefix notation

Lisp uses prefix notation, where operator is the first symbol in the list, followed by the operands.

(<operator> <operand>)

E.g.

(+ 1 2 3) ; 1 + 2 + 3 = 6 (+ 1 2 (* 3 4 5) 6) ; 1 + 2 + (3 * 4 * 5) + 6 = 69 (= (+ 2 2) (* 2 2)) ; t (for "True")

Naming convention in Lisp, possible due to prefix notation:

not-camel-case- function & variable names are hyphenated.

+constant+- constants usually wrapped in

+character. *global*- global variable usually wrapped in

*character. predicate?- name of functions that return Boolean, end in

?. set!- name of function that changes state, ends in

!. int->float- method name for converting.

3.2.2. Compound data - cons & list

We have seen how to write code (adding numbers). But how do we represent

data? For that we have a primitive procedure that builds compound data:

cons, (also called pair in Clojure). It “glues” two arguments and

returns a compound data object that contains the two arguments as parts.

The parts are retrieved using car and cdr (alias: first, rest in

Common Lisp).

(cons 1 2) ;; => (1 . 2) (define x (cons 1 2)) (car x) ;; => 1 (cdr x) ;; => 2

cons- Construct

car- Contents of address part of register (architecture of IBM 704)

cdr- Contents of decrement part of register (architecture of IBM 704)

A list is a nested chain of cons, where each leaf is an atom, terminated

by NIL (in Common Lisp) or empty list '() (in Scheme). A list

constructs a chain of cons cells, where last element in the pair points

to the next pair.

Common Lisp:

(cons 1 (const 2 (cons 3 (cons 4 NIL)))) ;; -> (1 2 3 4) (list 1 2 3 4) ;; -> (1 2 3 4)

Scheme:

(cons 1 (const 2 (cons 3 (cons 4 '())))) ;; -> (1 2 3 4) (list 1 2 3 4) ;; -> (1 2 3 4)

Figure 9: SICP fig 2.4, chain of cons

We can represent the chained cons cells as:

(a . (b . (c . (d . NIL))))

By convention, if the cdr is another cell, we drop the ., and the last

cell if it is NIL, and just write it:

(a b c d)

All code in Lisp is written as lists, thus everything is a binary tree.

E.g. consider this piece of code that adds 1 to each element (leaf) in the

list my-list:

(mapcar (lambda (x) (+ x 1)) my-list)

can be written out in its full, un-abbreviated, lisp:

(mapcar . ((lambda . ((x . NIL) . ((+ . (x . (1 . NIL))) . NIL))) . (my-list . NIL)))

i.e. everything is just cons cells, terminated in atoms, forming a binary tree full of code, that can be manipulated.

You can write macros that take this tree, as a data structure, and do whatever on earth they want with it. The abstract syntax tree of your Lisp program isn’t some opaque construct internal to the language; it’s just a tree: an incredibly simple data structure that you already use in everyday programming anyway

From Stackoverflow

Figure 10: Classic Lisp bumper sticker (src)

3.2.3. Parenthesis paralysis?

3.2.4. Everything is an expression

Every expression returns a value, (thus Alan Perils paraphrasing Oscar Wilde Lisp programmers know the value of everything, but the cost of nothing, because early Lisp implementations were slow).

Operands are evaluated before being sent to the function, here first multiply 3 with 4, then add result of that expression’s return value to addition with 1 and 2.

(+ 1 2 (* 3 4))

In Python, a statement is a piece of code that does something. A subset of statements are expressions, namely those that are a collection of symbols that jointly express a quantity. Expressions and are made up of atoms, i.e.:

- identifiers

- e.g. variable & function names

- literals

- e.g. numbers, floats, strings

- operators

- e.g.

*,+,(),[]…

(See stackoverflow for more)

3.2.5. Special forms - define

Lisp has a handful of special forms, where arguments are not evaluated

prior to being passed to the first symbol in the list. One example is

define, which binds values variables:

;; set vaue of 'x' to 3, and return 3 (define x 3) ;; set value of 'square' to lambda function requiring one argument (define square (lambda (x) (* x x))) ;; "syntatic sugar", allows us to re-write above: (define (square x) "Return input multiplied with itself." (* x x)) (square 3)

Note: a function is nothing more than a λ-function (from Alonzo Church, 1941) bound to a variable. Syntax for λ (Scheme)

(lambda <list-of-parameter> <list-of-code>)

(See appendix for resources on Lambda calculus in Lisp)

3.2.6. Special Form - if

Consider the if form:

(if <condition> <run-if-true> <run-if-false>)

If the if statement was a function, it would all of its

arguments. Instead, it is a special form, that will evaluate the second

argument if the first evacuates to true, else the third.

(Scheme has 5 special forms: quote, if, begin (like progn), set!

(like setq), and lambda. The define symbol is a macro).

3.2.7. Quotation - make code data

If we want to assign a list of “1 2 3”, to variable x we can not say

(define x (1 2 3)) ;; error! Trying to call 1 with input 2 & 3

Since code and data look the same, we distinguish the two by using

quotation. The primitive function quote returns its input:

(quote (1 2 3)) ;; => (1 2 3) ;; or short form ("syntactic sugar"): '(1 2 3) ;; => (1 2 3)

Similar, if we want to convert the addition example to data:

'(+ 1 2 3) ;; => (+ 1 2 3), not 6 (+ 1 2 3) ;; => 6

Similarly, we can construct a list, by using the list function:

(list 1 2 3 (+ 2 2)) ;; => (1 2 3 4)

Difference with quotation, is here it evaluates each input argument before constructing the list.

'(1 2 3 (+ 2 2)) ;; => '(1 2 3 (+ 2 2))

3.3. Recursion vs iteration

Lisp was the first language to introduce recursion. Furthermore, Scheme is

guaranteed to be tail recursive, meaning it needs no looping constructs

(“for, do, while”), like Pascal, C/C++, etc. where recursive function

calls will grow memory consumption with number of calls, even if the

process is iterative, thus the need for a special looping construct. Scheme

does not have this defect, as is demonstrated in the following.

3.3.1. Example: factorial function in Python

Consider computing the factorial function: \[ n! = n \cdot (n-1) \cdot (n-2) \cdots 2 \cdot 1 \]

This would probably be solved iteratively in Python by using the for

looping construct:

def factorial(n): "Iterate" prod = 1 for i in range(n): prod *= i + 1 return prod

3.3.2. Example: factorial recursive function in Python

However, we note that the factorial can also be re-written as:

\begin{equation*} n! = n \cdot (n-1)! \end{equation*}which results in the following recursive function in Python:

def factorial(n): "recursive" if n == 1: return 1 else: return n * factorial(n - 1) print(factorial(1000)) # => RecursionError, for Python 3.11

However, due to implementation limitation in CPython, this will quickly

throw a RecursionError: maximum recursion depth exceeded in comparison,

usually the recursion limit is 1000 in Python. Recursion is thus dissuaded

in Python, even before you risk getting a stackoverflow, due to expanding

memory consumption.

import sys print(sys.getrecursionlimit()) sys.setrecursionlimit(1500)

3.3.3. Example: factorial recursive function in Lisp recursive process

The equivalent code in Lisp (Scheme) is:

(define (factorial n) (if (= n 1) 1 (* n (factorial (- n 1)))))

which expands to a linear recursive process, e.g. for computing \(5!\):

(factorial 5) (* 5 (factorial 4)) (* 5 (* 4 (factorial 3))) (* 5 (* 4 (* 3 (factorial 2)))) (* 5 (* 4 (* 3 (* 2 (factorial 1))))) (* 5 (* 4 (* 3 (* 2 1)))) (* 5 (* 4 (* 3 2))) (* 5 (* 4 6)) (* 5 24) 120

This expansion will consume more memory, until it reaches the end of the recursion.

3.3.4. Example: factorial recursive function in Lisp iterative process

If the language supports tail recursion, we can re-write the function to still be recursive, but be iterative in its process:

(define (factorial n) (define (iter product counter) (if (> counter n) product ;; if true, return product (iter (* counter product) ;; else, call again, with count * prod (+ counter 1)))) ;; ...and increment counter++ (iter 1 1))

which will run as a linear iterative process, demonstrated here for computing \(5!\)

(factorial 5) (fact-iter 1 1 5) (fact-iter 1 2 5) (fact-iter 2 3 5) (fact-iter 6 4 5) (fact-iter 24 5 5) (fact-iter 120 6 5) 120

3.3.5. Example: factorial recursive function in Python iterative process

Identical implementation, but in Python. There is no reason this should

fail for n > 900.

def factorial(n): def iterate(product, counter): if counter > n: return product else: return iterate(counter * product, counter+1) return iterate(1,1) print(factorial(1000))

3.3.6. Generate code as data

Consider the recursive function from before. By simply quoting the

multiplication operator * and having it return a list, we now have a

function that generates the code, rather than the return value:

(define (factorial n) (if (= n 1) 1 (list '* n (factorial (- n 1)))))

Evaluated this returns:

scheme> (factorial 5) (* 5 (* 4 (* 3 (* 2 1)))) scheme> (eval (factorial 5)) 120

(Note: In GNU Guile Scheme we also need to pass interaction environment)

scheme@guile> (eval (factorial 5) (interaction-environment))

Now consider how easy we could move from evaluating code, to generating data that can be evaluated as code, by writing a function that generates code.

If we compare to Python, data and the language code are very different:

- data

- lists defined by

[], dicts by{}, tuples by() - code

- language defined by e.g.

def,class,if, etc.

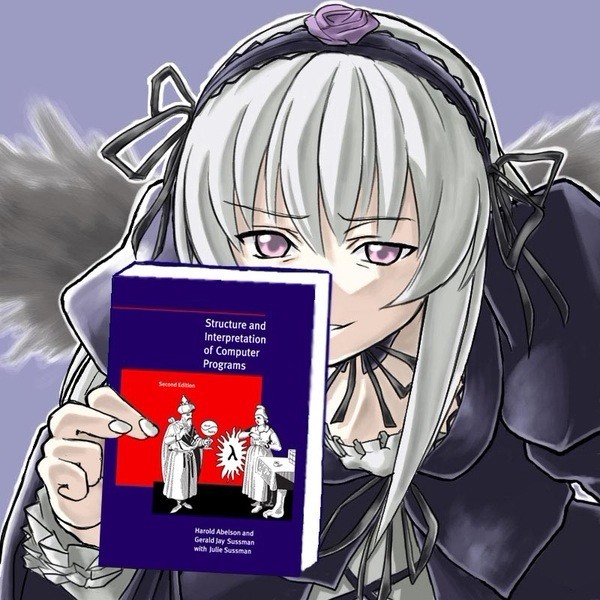

3.3.7. More: SICP - Structure and Interpretation of Computer Languages

Figure 12: (See src for +80 more sicp+anime images…)

I know that due to my work, I’ll still use Python for most projects, but these revelations have changed the way I think about my Python code, too.

Behnam Mohammadi (PhD student) - It’s Lambdas All the Way Down

The previous example was from the book Structure and Interpretation of Computer Languages (chapter 1.2.1), by Abelson, & Sussman. SICP is a true classics of computer science, and is still used in universities for first year computer science, e.g. at University of Oslo (2023) and Berkeley (CS61A archive), and considered a “must read” for those interested in programming, and the #1 most recommended book on hacker news.

There’s pdf, a nice html rendering and interactive html, lecture videos by authors (youtube), solutions, study guide, and blogs. In Racket there’s SICP compatibility mode. Although there is both a JavaScript version and a Python (somewhat) inspired version of the book, these versions are dissuaded:

SICP and Scheme go together like horse and carriage, and neither Python, JavaScript or other “real world” languages should taint the insight gained from that wonderful pairing. Because Scheme - being a LISP - has the same syntax for code and data, metalinguistic abstraction is facilitated.

/Hacker news

Figure 13: src

Abelson and Sussman (1983) is probably the best introduction to computer science ever written.

Peter Norvig - PAIP (p 777)

(Addendum: Although Lisp has had macros since mid 1960s, SICP does not cover macros, as they were only included in Scheme with R4RS in 1988).

3.4. Functions as first class citizens

Programming languages impose restrictions on the ways in which computational elements can be manipulated. Elements with the fewest restrictions are said to have first-class status, supporting all operations generally available to the language:

- can be named by variables

- can be passed as arguments to functions

- can be returned as result of functions

- may be included in data structures.

3.4.1. Example: Average dampening Lisp

In Lisp (and Python), functions are first-class citizens. As an example of

use, consider \(\sqrt(x)\) is a fixed point (i.e. x = f(x)) to

or more general: given a function f consider a function whose value at

x is equal to the average of x and f(x), i.e. need to both pass

function as argument and as return value:

(define (square x) (* x x)) (define (average a b) (/ (+ a b) 2.0)) (define (average-damp f) (lambda (x) (average x (f x)))) ((average-damp square) 3) ;; => 6

3.4.2. Example: Average dampening Python

Which we can similarly be written in Python:

def square(x): return x*x def average(a, b): return (a + b) / 2.0 def average_damp(f): return lambda x: average(x, f(x)) print(average_damp(square)(3)) # => 6

Here function f is the input argument, and return a function to be

applied to x to yield the average of x and f(x).

Although functions are first class citizens in Python, the main language implementation imposes limits on how many times we are allowed to use it (see previous section on recursion).

(For more, see “Procedures as return values” in (SICP 1.3.4)).

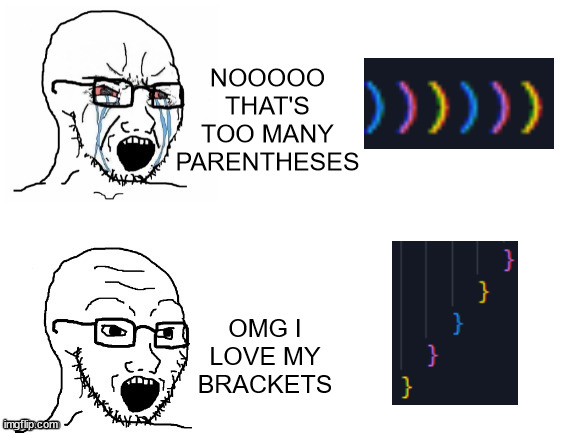

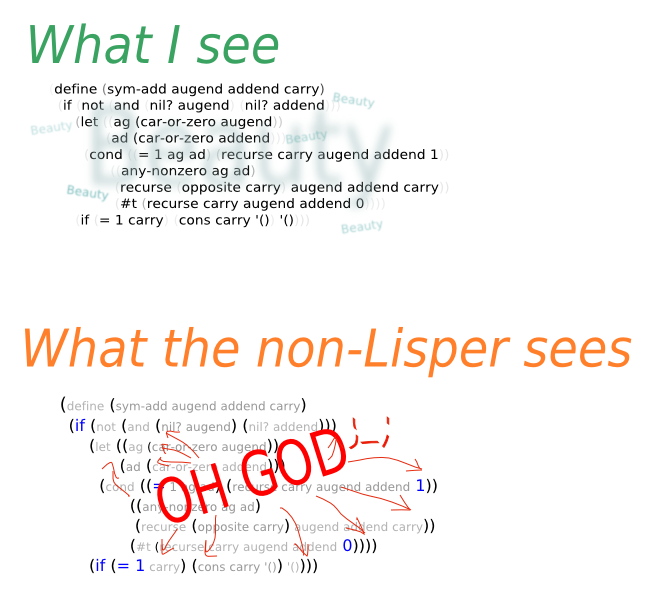

3.5. On the peculiarity of Lisp syntax

Figure 14: src

Lisp syntax is connected to its expressive power. Since Lisp’s syntax is uniform:

(<operator> <arg1> <arg2> ... <argn>)

it will, as we shall see, allow macros to operate on everything, without having to write an AST-manipulating program (like OCaml’s PPX). Paul Graham notes in Beating the Averages that:

is not so much that Lisp has a strange syntax as that Lisp has no syntax You write programs in the parse trees that get generated within the compiler when other languages are parsed

or in full:

Lisp code is made out of Lisp data objects. And not in the trivial sense that the source files contain characters, and strings are one of the data types supported by the language. Lisp code, after it’s read by the parser, is made of data structures that you can traverse.

If you understand how compilers work, what’s really going on is not so much that Lisp has a strange syntax as that Lisp has no syntax. You write programs in the parse trees that get generated within the compiler when other languages are parsed. But these parse trees are fully accessible to your programs. You can write programs that manipulate them. In Lisp, these programs are called macros. They are programs that write programs.

3.5.1. Same meta-syntax

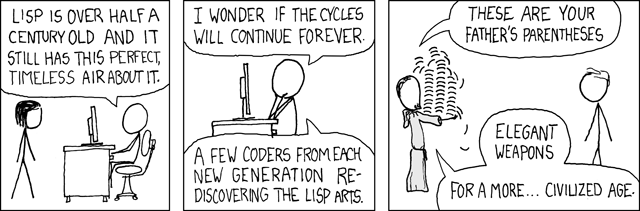

Figure 15: xkcd.com/297

Although there are different rules for how to combine symbols and numbers to form a semantically meaningful program, such as:

;; variable binding (define pi 3.14) ;; function definition (define (square x) (* x x)) ;; Conditional (short-circuiting) (if (> pi 3) 1 0) ;; Switch/case (equivalent to Ptyhon: if-elif-elif...-else) (case (* 2 3) ((2 3 5 7) 'prime) ((1 4 6 8 9) 'composite)) ;; => composite

demonstrating seemingly different syntax, they all share the same meta-syntax. We can think of it as:

- meta-syntax

- the parenthesis

- semantic syntax

grammar of symbols and lists

<s-expr> := <atom> | ( <s-expr>* ) <atom> := <symbol> | <number> | ...

where an s-expression is either an atom (symbol or number), or a

combination of other s-expressions.

(Above, based on discussion from Hacker News).

3.5.2. Python and Lisp syntax not so different

Although Python does not have the uniform syntax of Lisp, they both share having a controversial meta-syntax:

- Python

- block indentation

- Lisp

- parenthesis

Peter Norvig notes in his article Python for Lisp programmers, “For Python (Lisp) some people have initial resistance to the indentation as block structure (parentheses), most come to accept (deeply appreciate) them.”

Furthermore, in both languages, this meta-syntax leads to uniform code formatting:

One of Python’s controversial features, using indentation level rather than begin/end or braces, was driven by this philosophy: since there are no braces, there are no style wars over where to put the braces. Interestingly, Lisp has exactly the same philosphy on this point: everyone uses emacs to indent their code, so they don’t argue over the indentation. Take a Lisp program, indent it properly, and delete the opening parens at the start of lines and their matching close parens, and you end up with something that looks rather like a Python program.

Peter Norvig - Python for Lisp programmers

3.6. Lisp speed compared

| Test | Lisp | Java | Python 3 | Perl | C++ |

|---|---|---|---|---|---|

| hash access | 1.06 | 3.23 | 4.01 | 1.85 | 1.00 |

| exception handling | 0.01 | 0.90 | 1.54 | 1.73 | 1.00 |

| sum numbers from file | 7.54 | 2.63 | 8.34 | 2.49 | 1.00 |

| reverse lines | 1.61 | 1.22 | 1.38 | 1.25 | 1.00 |

| matrix multiplication | 3.30 | 8.90 | 278.00 | 226.00 | 1.00 |

| heapsort | 1.67 | 7.00 | 84.42 | 75.67 | 1.00 |

| array access | 1.75 | 6.83 | 141.08 | 127.25 | 1.00 |

| list processing | 0.93 | 20.47 | 20.33 | 11.27 | 1.00 |

| object instantiation | 1.32 | 2.39 | 49.11 | 89.21 | 1.00 |

| word count | 0.73 | 4.61 | 2.57 | 1.64 | 1.00 |

| Median | 1.67 | 4.61 | 20.33 | 11.27 | 1 |

| 25% to 75% | 0.93 to 1.67 | 2.63 to 7.00 | 2.57 to 84.42 | 1.73 to 89.21 | 1 to 1 |

| Range | 0.01 to 7.54 | 0.90 to 20.47 | 1.38 to 278 | 1.25 to 226 | 1 to 1 |

- Why is pgloader so much faster?

pgloader, for loading data into PostgreSQL is 30x faster when re-written from Python to Common Lisp. - How to make Lisp go faster than C, (pdf, Didier Verna, 2006).

CL-PPCRE, a regular expression library, written in Common Lisp, runs faster than Perl’s regular expression engine on some benchmarks, even though Perl’s engine is written in highly tuned C

The Machine that builds itself

Compiler macros can transform function calls into more efficient code at runtime, and a mandatory disassembler which enables programmers to fine-tune time-critical functions until the compiled code matches their expectations.

The Machine that builds itself

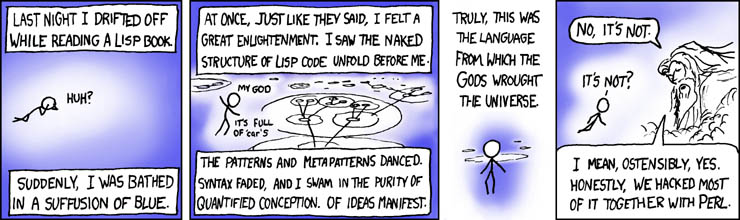

4. Lisp Family History

Figure 16: xkcd.com/224

In 1960, John McCarthy published a remarkable paper in which he did for programming something like what Euclid did for geometry. He showed how, given a handful of simple operators and a notation for functions, you can build a whole programming language.

[…]

It’s not something that McCarthy designed so much as something he discovered.

Paul Graham - The Roots of Lisp

4.1. Introduction

Languages must evolve or die.

- Python evolves through major & minor versions: 1 -> 2 -> 3.0 -> 3.1 …

- C++ evolves through updated standards C++11 -> C++14 -> C++17

- Lisp evolves by dialects, forming a family of Lisp languages/dialects.

Where Python only has one (main standard) implementation (CPython), each Lisp dialect typically have many different implementations (both interpreters and compilers), similarly to how C++ has many different compilers (gcc, clang/LLVM, intel, mvcc, Borland).

As noted in PAIP: programming languages come and go as new styles of programming are invented, Lisp just incorporates new styles as macros (e.g. For-loop, CLOS), possible since programs are just simple data structure (list).

Figure 17: wikipedia

Overall, the evolution of Lisp has been guided more by institutional rivalry, one-upsmanship, and the glee born of technical cleverness that is characteristic of the \hacker culture“ than by sober assessments of technical requirements. Nevertheless this process has eventually produced both an industrial- strength programming language, messy but powerful, and a technically pure dialect, small but powerful, that is suitable for use by programming-language theoreticians.

4.2. Common Lisp (1984- ANSI 1994-)

“We were after the C++ programmers. We managed to drag a lot of them about halfway to Lisp.”

- Guy Steele, co-author of the Java spec

Paul Graham - Revenge of the Nerds

Key points of Common Lisp:

- Industrial

- Unification of many previous Lisp dialects (MacLisp and it’s successors).

- Standard Lisp dialect for large scale software projects

- Large & comprehensive (700 functions), advanced,

ANSI Common Lisp is a language specification, of which there are many implementations. Some popular:

- SBCL (Steel Bank Common Lisp), fork from CMUCL, fast, popular, well maintained & developed.

- Allegro Common Lisp

- LispWorks

- Clasp (LLVM, seamlessly interoperates with C++)

- CMUCL

- GNU Common Lisp (not fully ANSI), used by mathematical tools like Maxima & AXIOM.

(Most popular IDE for CL is Emacs, e.g. see this for minimal setup, or Lem, which is written in Common Lisp).

4.2.1. Lisp-2 vs Lisp-1

One of the distinct features of Common Lisp is that it has different name

spaces for functions and variables (defun & defvar), which

colloquially called “Lisp-2”. This also means we need to have a special

function-quote operator #.

Example of some “Lisp-2” languages, aka “multi-value-name”:

- Common Lisp

- Emacs Lisp

- Ruby

- Perl

Example of some “Lisp-1” languages, aka “single-value-name”:

- Scheme

- Clojure

- Python

- C, C++

For more info on Lisp1 vs Lisp2, and tradeoffs see Technical Issues of Separation in Function Cells and Value Cells and Christian Queinnec’s book, “Lisp in Small Pieces”.

4.3. Scheme (1975-)

Scheme has an outsized importance in the world of programming languages compared to its actual use in industry, because it has so often pioneered features that later broke into the mainstream.

Key points of Scheme:

- Academic,

- Minimal & clean

- For teaching and research & experimentation with programming language design

- Used in SICP

- Developed by committee releasing implementation standards “R^nRS” (“Revised n Report on the Algorithmic Language Scheme” ).

Some notable implementations:

- Racket, user friendly, comes with its own IDE (DrRacket)

- Chez Scheme (fastest),

- GNU Guile, preferred extension language for the GNU Project

- Chicken, compiles to C, portable, extensible

- Gambit, compiles to C, “unique blend of speed, portability and power”

- Chibi, minimal, for extension

- Kawa, runs on JVM,

- MIT/GNU Scheme, by Guy Steele & Gerald Jay Sussman

Let me repeat here what a radical decision it was to go and build a Scheme. The only Scheme implementation that had ever been built at this point was the research prototype Steele had done for his Masters. All serious Lisps in production use at that time were dynamically scoped. No one who hadn’t carefully read the Rabbit thesis believed lexical scope would fly; even the few people who had read it were taking a bit of a leap of faith that this was going to work in serious production use – the difference between theory & practice is, uh, larger in practice than in theory.

Olin Shivers: History of T

For some implementation benchmarks, see:

Interestingly, the statistical programming language R, is written on top of

Scheme, and makes use directly from Lisp to use macros for creating Domain

Specific Language (DSL) e.g. for ggplot2, dplyr and plyr.

As a side-note: this blog post talks about different implementations of Scheme, and its use for games programming, and on embedding:

S7 is an example of an embeddable Scheme. Guile is also used for extending C programs, though typically that involves dynamically linking to libguile rather than embedding the interpreter into the program’s executable. Fennel takes a different approach, recognizing that there are many existing applications that are already extensible through Lua, and provides a lispy language that compiles to Lua. …. The initial vision for Guile was to Emacsify other programs by adding a Scheme interpreter to them. These days, the best practice is to write your program in Scheme to begin with. Common Lisp is probably the best example, though. Implementations like SBCL have good C FFIs and can compile efficient native executables, minimizing the desire to use some C for performance reasons.

4.4. Clojure

- Modern, compared to other Lisps (e.g. case sensitive, Lisp-1)

- Runs on JVM

- ClojureScript - compiles Clojure to JavaScript

For more, see:

- clojure-by-example - online documentation

- clojure for the brave and true - book

- Maria, a coding environment for beginners - tutorial

4.5. Misc

- elisp (aka Emacs Lisp) - Scripting language for GNU Emacs (and XEmacs)

uLisp - Lisp for microcontrollers

Lisp for Arduino, Adafruit M0/M4, Micro:bit, ESP8266/32, RISC-V, and Teensy 4.x boards.

PetaLisp - for extreme parallelization / high performance computing

Attempt to generate high performance code for parallel computers by JIT-compiling array definitions. It is not a full blown programming language, but rather a carefully crafted extension of Common Lisp that allows for extreme optimization and parallelization.

PicoLisp (docs) - (1 MB) Focus on data, rather than code. Three data structures (nubmer, symbol, cons), all implemented as the internal single data type “cell”.

In programming, the ultimate purpose is to manipulate data, not functions. Code and data are equivalent in Lisp, so you get the function manipulations for free anyway, but the focus should be on data.

Hy / Hylang (embedded in Python as alternate syntax for Python)

Compared to Python, Hy offers a variety of extra features, generalizations, and syntactic simplifications, as would be expected of a Lisp.

hissp Scheme like & minimalist, full access to Python’s 3.8+ ecosystem

It’s Python with a Lissp.

- Arc - (By Paul Graham) runs Hacker News web forum.

- Franz Lisp - Very popular in 70s & 80s. Name is pun on “Franz Liszt”. Discontinued.

- Fennel Lisp that compiles to Lua.

5. Metaprogramming

Every other language on the planet has only one dimension of abstraction (functions/procedures/objects). Lisp adds a second dimension of abstraction: syntactic abstraction. The resulting expressiveness takes the abstraction level of the code to an entirely different level.

Proficient Lispers invent sub-languages for the simplest of domains and their programs are a very elegant collection of very high-level abstractions created in special purpose languages (aka DSL: domain specific languages). In my experience, this is not true in any other language.

(quora)

As we shall see, being able to write program that writes programs, allows for elegant solutions to problems, not easily accessible in languages. A program that can modify itself while running, and perform computation at compile time, rather than run time.

5.1. History

Metaprogramming was especially popular in the field of Artificial Intelligence, during 1970s & 80s, using Lisp. Since the language is itself a first-class data type, this makes it the quintessential language for metaprogramming. Wikipedia calls this homoiconicity, though that definition is a misnomer. Better to say: in Lisp the external and internal representation of the language (on which the virtual machine operates) is the same (or very close).

For comparison, Python is not homoiconic, as the external representation is the text file, and the internal is the bytecode on which the interpreter operates, while in Lisp both representations are s-expressions (at least in theory, in practice, it depends on implementation details). Likewise, C is not/less homoiconic, as the external representation is the program text, and the internal is machine code.

Although many languages do offer possibility for metaprogramming, or manipulating the Abstract Syntax Tree, we shall see that this is strongly dissuaded in all but a few languages.

Update , A blog post demonstrating Lisp using Python: Homoiconic Python - McCarthy’s Lisp in Python lists

5.2. Python Metaprogramming

Most Python programmers rarely, if ever, have to think about metaclasses.

Python provides metaclasses, which is a form of meta programming, that can

dynamically re-program classes, through access to a dict of methods and

attributes of the class. However, unless you know what you are doing,

you are dissuade from using it. Example of its use case is e.g. how

sklearn transformer can enforce that each inheriting class implements a

fit() and transform() function).

Use cases (src):

- Check that class was defined correctly.

- Check that every module in our framework follows naming convention for methods/attributes.

- Raise errors during module import.

- Each time a base class is subclassed, we can run a specific code using metaclass.

- We often use special methods and metaclasses to change the way Python objects work. We can even extend Python syntax by creating our own domain-specific language (DSL) using the abstract syntax tree (AST).

Technically, one could construct string of valid Python code, and use eval:

x = 1 y = eval('x + 1') # -> y = 2 # typical use: eval(input())

5.3. C macros

C has something called pre-processing macros (lines starting with #) that

do text substitution (simple search & replace) before compilation. For

example:

(The word “macro” in computer science, comes from assembly programming’s macro-instructions, which looks like a single instruction, but expands into a sequel of instructions.)

5.3.1. Substitution

Simple text search-and-replace, prior to compile time, for constants:

#define PI 3.1415 #define SIZE 32 float foo(){ float tau = 2 * PI; int array[SIZE]; /* array holding SIZE integers */ return tau; }

5.3.2. Conditional statements

Conditional statements, for which version of code should be used, depending on the platform it is being compiled on:

#ifdef __linux__ /* do some linux specific stuff */ #elif __APPLE__ /* do some apple specific stuff */ #endif

5.3.3. The bad

- Pre-processor uses different syntax than

C - Does not have access to the parser of the programming language, i.e. it knows nothing of the C programming language.

5.4. C++ templates

C++ template programming is to Lisp macros what IRS tax forms are to poetry

Christian Stafmeister (2015, Google Tech Talk)

In C++ (and Mojo) we specify the input type of the parameters, and can have functions with same name, but different implementation, depending on the input type:

// square function for integers int square(int x){ return x*x; } // square function for float precision float square(float x){ return x*x; }

Template system acts as a blueprint for the compiler to generate the code for overloaded functions, for only the types we use, at read time:

// Generate code for square function for each type we need: template<typename T> T square(T x){ return x*x; } float a = 3.0f; float aa = square(a); // implicit float bb = square<float>(3); // explicit

(As of C++20 we can simply use auto keyword, for abbreviated function

template).

The syntax for templates in C++ is very limited.

(Technically, one could have metaprogramming in C++, by linking the program

to libclang, and have access to the AST of clang in the program, as noted).

5.5. In other languages

Other languages have some for of metaprogramming capabilities, e.g.

- Julia

- has

eval()andquote()and symbols & expressionsdump()see docs for more. - Rust

- see little book of rust macros for more.

- Mojo

- in the works.

- Ruby

Inspired by Lisp, but considered odd:

… can leave you beset with strange and foreign methods, unfamiliar syntax, and downright mystifying blocks of code. It’s true: metaprogramming is a relatively advanced topic. If you want to really dig in and leverage it on a deep level, it will take time, effort, and a different way of thinking than you’re used to.

6. Macros

Programs that write programs? When would you ever want to do that? Not very often, if you think in COBOL. All the time, if you think in Lisp.

Paul Graham - Beating the Averages

When the code has a pattern, we can make the code clearer by abstracting the pattern, by writing a macro that generates it. As we shall see later, this mitigates most of the need for “design patterns”.

6.1. How to write a macro

In order to write a macro, one typically starts with:

- Writing the code we want it to output.

- Write how you want to call/use the macro

- Write the macro that meets 1. & 2.

There are some simple best practice rules to follow, see PCL ch 7 (and look up gensym).

6.1.1. In Common Lisp

A macro in Lisp superficially resembles a function in usage. However, rather than representing an expression which is evaluated, it represents a transformation of the program source code.

Define macro form:

(defmacro name (parameter) "Optional documentation string." body-form)

is similar to define function form

(defun name (parameter) "Optional documentation string." body-form)

A crucial difference between the two:

- Unlike functions, input parameters are not evaluated for a macro.

- Macro runs before executing the code, to re-write the program before compilation/interpreter runs

Note: at macro expansion time one can not access data that will exist at run time, only data available is the source code itself. When Lisp is interpreted, rather than compiled, the distinction between macro expansion time and runtime is less clear because they are temporally intertwined.

6.1.2. In Scheme:

(define-macro (name parameters) body-form) (define (name parameters) body-form)

6.2. Simple macro example: Reverse Lisp

A first and simple example (from PCL, ch. 3), demonstrates how we can make

a new programming language that is the reverse of Lisp. I.e. identical

syntax, except read from right-to-left. First consider the function reverse

that does what the name suggests, when applied on lists:

(reverse '(1 2 3 4)) ;; => (4 3 2 1)

We can now define a macro that transforms regular Lisp to Backwards Lisp (right-to-left evaluation):

(defmacro backwards (expr) (reverse expr))

Now, only post-fix Lisp forms (reversed lists) are valid expressions in our

Backwards Lisp language, that requires we wrap the Backwards Lisp in the

backwards macro (compare how Python wraps valid Python code with the

eval expression: eval("1 + 2")):

(backwards (2 1 +)) ;; => 3

In the above, the (invalid Lisp) expression (1 2 +) is noted to be an

argument to a macro (a /special form/(!)), thus it is not evaluated,

instead it is passed in as un-evaluated data to the macro body that

reverses it to valid regular Lisp, that can then be evaluated.

(macroexpand-1 '(backwards (2 1 +))) ;; => (+ 1 2)

NOTE: Since the compiler will generate identical code for (+ 1 2) and

(backwards (1 2 +)), the efficiency of Backwards Lisp is identical to

normal Lisp.

Macros allow one to define a new syntax, e.g. to use C-syntax in Common Lisp, like with-c-syntax, or lisp With white space indentation, instead of parenthesis.

6.2.1. Notes on macroexpand

Note-1: macroexpand-1 is a function, thus we need to quote the input

data, to prevent evaluation of it

Note-2: the Common Lisp function macroexpand also expands macros of the

language (like do, and, etc), while macroexpand-1 only expands user

defined macro. In Emacs slime-macroexpand-1 (C-c RET on open parenthesis)

expands the code.

6.3. Backquotes / Quasiquote

The backquote / quasiquote operator is useful when writing macros for unquoting. It allows us to insert values in a quoted expression, at program definition time (rather than at run time):

(defvar a 3) '(1 2 a) ; => (1 2 a) `(1 2 ,a) ; => (1 2 3)

Rather than use the literal symbol a, insert value of variable a.

Another useful way to think about the backquote syntax is as a particularly concise way of writing code that generates lists. This way of thinking about it has the benefit of being pretty much exactly what’s happening under the covers – when the reader reads a backquoted expression, it translates it into code that generates the appropriate list structure. For instance,

`(,a b)might be read as(list a 'b). It’s important to note that backquote is just a convenience. But it’s a big convenience.

6.4. Full macro example: Unit testing

Here we demonstrate an actual use case for macros, by developing a unit test framework (based on PCL ch 9). It will consist of expressions, testing a case, and returning a boolean: true if passed, false if failed.

6.4.1. Starting

For example, testing + operator (silly to test built-in, but instructive):

(= (+ 1 2) 3) ;; => T (= (+ 1 2 3) 6) ;; => T (= (+ -1 -3) -4) ;; => T

Or wrap it in a function:

(defun test-+ () (and (= (+ 1 2) 3) (= (+ 1 2 3) 6) (= (+ -1 -3) -4)))

Problem: If one of the three test cases fails, when we run (test-+) will

return NIL, but we will not know which case it was that failed.

6.4.2. Print which case failed

Consider:

(defun test-+ () (format t "~:[FAIL~;pass~] ... ~a~%" (= (+ 1 2) 3) '(= (+ 1 2) 3)) (format t "~:[FAIL~;pass~] ... ~a~%" (= (+ 1 2 3) 6) '(= (+ 1 2 3) 6)) (format t "~:[FAIL~;pass~] ... ~a~%" (= (+ -1 -3) -4) '(= (+ -1 -3) -4)))

The above code reports each test case individually.

For Pythonics, the first line would be equivalent to:

if 1 + 2 == 3: # (expression is code) print("pass") else: print("FAIL") print("1+2 == 3") # (expression is data)

Problem: we risk typo in the code duplication of each case, and we would

like the function as a whole to return T if all cases passed.

6.4.3. Reduce code duplication

First get rid of repeated calls to format (printing function):

(defun report-result (result form) (format t "~:[FAIL~;pass~] ... ~a~%" result form)) (defun test-+ () (report-result (= (+ 1 2) 3) '(= (+ 1 2) 3)) (report-result (= (+ 1 2 3) 6) '(= (+ 1 2 3) 6)) (report-result (= (+ -1 -3) -4) '(= (+ -1 -3) -4)))

However, we’re still repeating the code for each case, risking mislabeling it. Ideally we want to treat the input as both code (evaluate it), and data (to label it). I.e. strong indication we want a macro!

6.4.4. Macro for the win

Ideally, we would want to write:

(check (= (+ 1 2) 3))

and have it mean:

(report-result (= (+ 1 2) 3) '(= (+ 1 2) 3))

so a trivial macro to do the above would be:

(defmacro check (form) `(report-result ,form ',form))

(Where we use backtick / quasiquote, in short: same as normal quote or

except when a symbol is prefixed ,, it substitutes the variable value.)

Reducing the final code to:

(defun test-+ () (check (= (+ 1 2) 3)) (check (= (+ 1 2 3) 6)) (check (= (+ -1 -3) -4)))

However, we have repeated calls to the check macro.

6.4.5. Reducing

We can fix the repeated calls to check, by re-defining the macro to

accept an arbitrary number of arguments:

(defmacro check (&body forms) `(progn ,@(loop for f in forms collect `(report-result ,f ',f))))

which would be used as:

(defun test-+ () (check (= (+ 1 2) 3) (= (+ 1 2 3) 6) (= (+ -1 -3) -4)))

There are several things happening in the code above:

- We used

prognjust to collect each expression in “the same unit block” for execution, and

,@is used for splicing the result of the expressions (must be list). Consider:`(and ,(list 1 2 3) 4) ;; => (AND (1 2 3) 4) `(and ,@(list 1 2 3) 4) ;; => (AND 1 2 3 4)

6.4.6. epilogue

The example in PCL continues with fixing return statement, and also

printing the name of the function (e.g. test-+), and eventually a whole

hierarchy of test functions, with the full path of which call failed.

In a total 26 lines of code(!)

6.5. Conditionals as recursive macros

We saw in Reflections on “Growing a language, that Guy Steel argued:

New functionality added to the language by the user should be indistinguishable from functionality added by the language designers.

In fact, many parts of the core Lisp language is implemented as macros

themselves. This is the case with conditionals like and, or, cond,

etc. If these were implemented as functions, they would not be able to

“short circuit”, i.e. abort evaluating the input arguments once the

inevitable return value of the expression is known.

For example, consider the code below. If (x is 3) then the arguments will

be evaluated to: True, False, and thus last will be left un-evaluated.

(and (= x 3) (> -1 0) (> 2 1))

Had and been a function, each of the three argument would have been

evaluated, before the final result was returned by the and function.

By using macroexpand we can see the and macro generates nested

if-statements:

(macroexpand '(and 1 2 3 4))

expands to:

(if (if 1 (if 2 3)) 4)

worth noting: since everything in Lisp evaluates to true, except NIL (which

is an alias for the empty list), and will either return NIL (if false),

or return the value of the last argument if all are true. In the example

above, and returns 4.

Similarly, for the cond expression in Common Lisp (like switch in

C/C++) is also a recursive macro, building nested if-statements, similar to

Python’s if-elif-else pattern. (For more, see PCL ch 7).

6.6. When to write a macro

In short, where a function will do, don’t use a macro. Macros are used for things functions can not do, such as:

- When we want to treat code as data (e.g. previous example)

- When we want to control (or prevent) evaluation of input arguments, i.e.

operator needs access to parameters before they are evaluated (e.g.

and). - When you need performance by avoiding runtime function calls

- When it significantly improves readability of code, by providing a simple syntax for repeated patterns. Abstraction to clarify intent, as to not have half of the code pattern in the brain.

More on this soon, in Lisp vs other languages, especially the last point above, on how abstractions help move code patterns from the brain to the code.

Note: when we have bugs or crashes from macros, the error message will be from the resulting code the macro generated, not from the macro itself.

See When to use Macros (ch 8) in On Lisp (Paul Graham) for detailed discussion.

6.6.1. Sidetrack: Trivial example i Python

Consider the case where we want to use both the name and the value of the variable that holds the array indices to be used, such that they have different labels in the printed text.

# loop over all three arrays of indices for ind in [train_index, val_index, test_index]: print(f"stratified: {df.iloc[ind].target.sum() / len(df.iloc[ind]):.4f}")

To solve above problem in Python today, we would typically resort to a

dictionary, where we can print both key and use the value. With

Macros, it would be trivial to use both variable name and variable value

in a function.

6.7. 1. Read-time, 2. Compile-time, 3. Run-time

The three big moments in a Lisp expression’s life are read-time, compile-time, and runtime. Functions are in control at runtime. Macros give us a chance to perform transformations on programs at compile-time.

Paul Graham - On Lisp, p. 224 ch 17 (Reader Macro)

There are actually several different kinds of macros. The “normal” macros transform programs at compile time. However, before that, the code is read at read time, which is where we can apply reader-macros, to transform the syntax.

Macros and read-macros see your program at different stages. Macros get hold of the program when it has already been parsed into Lisp objects by the reader, and read-macros operate on a program while it is still text. However, by invoking read on this text, a read-macro can, if it chooses, get parsed Lisp objects as well. Thus read-macros are at least as powerful as ordinary macros.

Paul Graham - On Lisp, p 225 ch 17.1

- Read-time - Reader-macros can reprogram the syntax, before parsing, e.g.

- Syntactic sugar: replace quotes

'<expression>with(quote <expression>) - Replace parenthesis with space indentation: wisp (“white space lisp”)

- C-language syntax in Lisp: with-c-syntax

- Syntactic sugar: replace quotes

- Compile time,

- AST/Transform - Regular macros operate on semantic structure (Lisp objects), and have access to - and can manipulate - its AST

- Compile time - Compile macros can hook into the compile process. Usually just for optimization.